Location Information Collection – Creepy?

We share a lot of data online every day as part of our online activity. Much of it is passive information that the services we use require. There is, however, also a growing amount of actively shared personal information, such as the location information we pass on via social networking sites.

As people become more familiar with these services, they are increasingly willing to share their location as an add-on. On the one hand this is fun and interesting for a small circle of friends, but on the other there are services built entirely around the concept of sharing location, such as Foursquare, Facebook Places, Google Latitude or Gowalla. They do not work without the location being shared. Furthermore, they are all public in the sense that whatever you share and do using these services is visible and accessible to anybody with internet access and some computer skills.

Moreover, it is not only visible but accessible as a dataset that can be downloaded, mined and manipulated. These services thus generate a lot of data that remains usable long after the initial service has been delivered. Even after you have checked in at a restaurant, earned the points and gained the Mayorship (Foursquare), the information that you were there at that time and location remains in the database.

In this sense, every single step can be retraced by mining the service provider's database. Through their APIs, the providers encourage developers and users to do so.

This is of course an extremely interesting data pool for many fields. From these large dumps of social, temporal and location data, vast networks of social interaction and activity can be recreated and studied. Interests range from marketing to transport planning and from banking to health assessment. It is still unclear whether these large datasets are actually useful, but at the moment they represent the state of the art, a new type of insight generator. As a sort of mass data generator they carry a promise of new growth.

It is, however, still tricky to actually do the data mining. Accessing the data via the API, setting up a database, managing the potentially huge amount of data and then also processing it requires a specific set of computer skills. It is not exactly drag and drop. However, third-party applications that provide these services are popping up. Especially in the case of Twitter, numerous tools offer data collection, such as 140kit or twapperkeeper.
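To give a rough idea of the "set up a database and process the data" part, here is a minimal Python sketch. It assumes the records have already been fetched from a service API (that step is left out), and the table layout, the function names `build_store` and `timeline`, and the sample values are all made up for illustration.

```python
import sqlite3

# Hypothetical records, shaped the way a collector might store them after
# fetching from a service API. None of these values are real data.
SAMPLE_RECORDS = [
    ("example_user", "twitter", "2011-03-01T08:15:00", 51.5246, -0.1340),
    ("example_user", "foursquare", "2011-03-01T12:40:00", 51.5155, -0.1420),
    ("example_user", "twitter", "2011-03-02T08:10:00", 51.5246, -0.1340),
]


def build_store(records):
    """Create an in-memory database and load the location records."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE locations ("
        "username TEXT, service TEXT, seen_at TEXT, lat REAL, lon REAL)"
    )
    conn.executemany("INSERT INTO locations VALUES (?, ?, ?, ?, ?)", records)
    conn.commit()
    return conn


def timeline(conn, username):
    """Return all stored points for one user, oldest first."""
    cursor = conn.execute(
        "SELECT seen_at, service, lat, lon FROM locations "
        "WHERE username = ? ORDER BY seen_at",
        (username,),
    )
    return cursor.fetchall()


if __name__ == "__main__":
    store = build_store(SAMPLE_RECORDS)
    for row in timeline(store, "example_user"):
        print(row)
```

Even this toy version shows why the data stays useful long after the check-in: once the rows are stored, retracing someone's steps is a single query.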

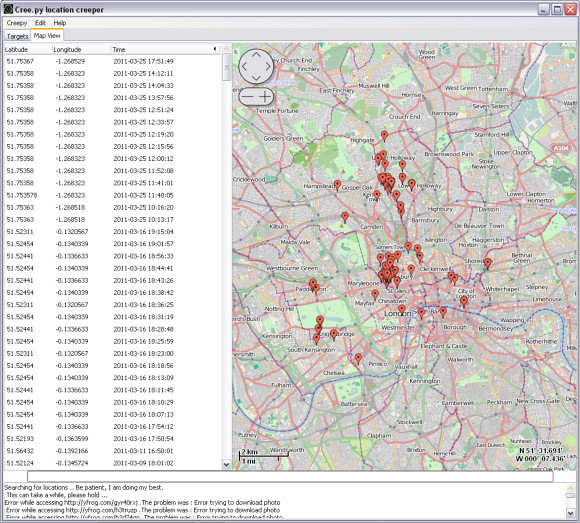

Creepy is a new tool that allows searching multiple services at the same time. This is new and brings in an additional dimension. Being able to mine several services at once can of course create quite a detailed picture of an individual's activity, since each service tends to be used for a different, specific purpose. And with most internet users in the habit of reusing the same aliases and user names, it might be rather simple to cross-identify activities on YouTube, Twitter, Facebook and Flickr.

What Creepy can do for you is find all the locations stored on any of these services for a given username. You put in a username and the application goes off to crawl the sharing sites via their APIs and brings back all the location tags ever associated with this name. These can be tweets, check-ins or geotagged images. The images are especially tricky, since to some extent they bypass the location sharing option. Even if a user does not share their location on Twitter, for example, an uploaded image may still contain the location in its EXIF data. The same goes for Flickr and other photo sharing sites.
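To illustrate why the EXIF data matters: a photo's GPS tags store latitude and longitude as degrees, minutes and seconds plus a hemisphere reference (N/S, E/W). The sketch below converts such values to decimal degrees. It is not how Creepy itself does it; the function names are made up, and the input is assumed to be a plain dictionary keyed by the standard EXIF GPS tag names, already extracted from the image by some other library.

```python
def dms_to_decimal(dms, ref):
    """Convert (degrees, minutes, seconds) plus a hemisphere letter
    to signed decimal degrees. South and West become negative."""
    degrees, minutes, seconds = (float(part) for part in dms)
    value = degrees + minutes / 60.0 + seconds / 3600.0
    return -value if ref in ("S", "W") else value


def gps_from_exif(gps):
    """Return (lat, lon) from a dict of EXIF GPS tags, or None if the
    image carries no usable position. The dict shape is an assumption."""
    try:
        lat = dms_to_decimal(gps["GPSLatitude"], gps["GPSLatitudeRef"])
        lon = dms_to_decimal(gps["GPSLongitude"], gps["GPSLongitudeRef"])
    except KeyError:
        return None
    return lat, lon


if __name__ == "__main__":
    # Hypothetical tag values, not taken from a real photo.
    tags = {
        "GPSLatitude": (51, 30, 0),
        "GPSLatitudeRef": "N",
        "GPSLongitude": (0, 7, 30),
        "GPSLongitudeRef": "W",
    }
    print(gps_from_exif(tags))
```

If the tags are present, the position comes along with the picture whether or not the user switched on location sharing in the service itself.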

The tool is stepping in, to some extent, for what pleaserobme.com was fighting for. It is about raising awareness of ethical and privacy considerations, addressing mainly the wider public, the users directly.

The Creepy service was developed by Yiannis Kakavas. He explains the purpose of his tool to thinq as twofold: “First, to try and raise awareness about privacy in social networking platforms. I wanted to stress how ‘easy’ it is to aggregate all the seemingly small and innocent pieces of data people are sharing into a ‘larger picture’ that potentially gives away information that users wouldn’t think of sharing. For example, where do they live, where do they work, where and at what times they are hanging out, when they are not at home et cetera. I think that sometimes it is worth ‘scaring’ people into being more careful on how much they share online. Secondly, I wanted to create a tool for social engineers to help with information gathering. I believe Creepy can be of real use to security analysts performing penetration testing for the initial process of gathering information about the ‘targets’ – information that can be used later for a number of purposes.”

Image taken from ilektrojohn / Screenshot of the Creepy interface to start searching for user names.

The app is available for download on Linux HERE and for Windows HERE. A Mac version is being worked on at the moment. The interface offers different routes. You can search directly by username, which can be either a Twitter or a Flickr user name. It also offers the option to search for user names first by entering other details, like a full name. This, however, requires identifying yourself to the Twitter server first, so it is not entirely anonymous.

There is a time limitation on the Twitter data, though. Twitter only serves results going back a few months, not the whole data set. So your activities registered on Twitter two years ago should be safe, especially if you have been tweeting like mad recently. Creepy also offers the option to export the found locations as either a CSV or a KML file, which is quite handy. The details Creepy brings back are the location, the time and a link to the original content for following up. In the case of Twitter this is the URL of the original message showing the text.
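For a sense of what such an export might look like, here is a small Python sketch that writes a list of points as CSV and as KML. The column names, the `to_csv` and `to_kml` functions and the sample points are assumptions for illustration; the actual layout of Creepy's export files may well differ. Note that KML expects coordinates in longitude,latitude order.

```python
import csv
import io
import xml.etree.ElementTree as ET

KML_NS = "http://www.opengis.net/kml/2.2"

# Hypothetical results: time, position and a link to the original content.
POINTS = [
    {"time": "2011-03-01T08:15:00", "lat": 51.5246, "lon": -0.1340,
     "url": "https://example.com/status/1"},
    {"time": "2011-03-01T12:40:00", "lat": 51.5155, "lon": -0.1420,
     "url": "https://example.com/status/2"},
]


def to_csv(points):
    """Return the points as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["time", "lat", "lon", "url"])
    writer.writeheader()
    writer.writerows(points)
    return buffer.getvalue()


def to_kml(points):
    """Return the points as a KML document with one Placemark each."""
    ET.register_namespace("", KML_NS)
    kml = ET.Element(f"{{{KML_NS}}}kml")
    document = ET.SubElement(kml, f"{{{KML_NS}}}Document")
    for point in points:
        placemark = ET.SubElement(document, f"{{{KML_NS}}}Placemark")
        ET.SubElement(placemark, f"{{{KML_NS}}}name").text = point["time"]
        ET.SubElement(placemark, f"{{{KML_NS}}}description").text = point["url"]
        geometry = ET.SubElement(placemark, f"{{{KML_NS}}}Point")
        # KML wants longitude first, then latitude.
        coordinates = ET.SubElement(geometry, f"{{{KML_NS}}}coordinates")
        coordinates.text = f"{point['lon']},{point['lat']}"
    return ET.tostring(kml, encoding="unicode")


if __name__ == "__main__":
    print(to_csv(POINTS))
    print(to_kml(POINTS))
```

The CSV is the easier one to open in a spreadsheet or load into a database, while the KML can be dropped straight into a map viewer such as Google Earth.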

Image by urbanTick / Screenshot of the Creepy app running on windows showing results for ‘urbanTick’ based on location from twitter messages.

Via thinq