New Paper: User-Generated Big Data and Urban Morphology

“This cutting edge special issue responds to the latest digital revolution, setting out the state of the art of the new technologies around so-called Big Data, critically examining the hyperbole surrounding smartness and other claims, and relating it to age-old urban challenges. Big data is everywhere, largely generated by automated systems operating in real time that potentially tell us how cities are performing and changing. A product of the smart city, it is providing us with novel data sets that suggest ways in which we might plan better, and design more sustainable environments. The articles in this issue tell us how scientists and planners are using big data to better understand everything from new forms of mobility in transport systems to new uses of social media. Together, they reveal how visualization is fast becoming an integral part of developing a thorough understanding of our cities.”

Table of Contents

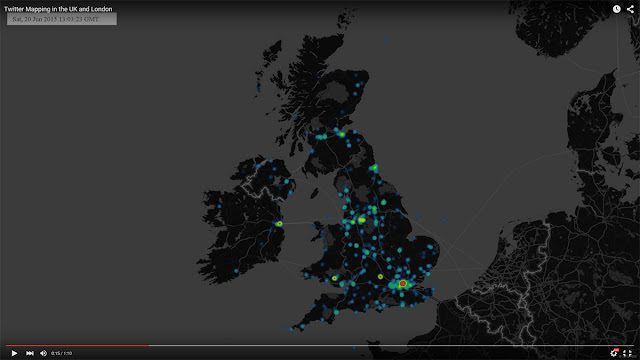

“Traditionally urban morphology has been the study of cities as human habitats through the analysis of their tangible, physical artefacts. Such artefacts are outcomes of complex social and economic forces, and their study is primarily driven by traditional modes of data collection (e.g. based on censuses, physical surveys, and mapping). The emergence of Web 2.0 and through its applications, platforms and mechanisms that foster user-generated contributions to be made, disseminated, and debated in cyberspace, is providing a new lens in the study of urban morphology. In this paper, we showcase ways in which user-generated ‘big data’ can be harvested and analyzed to generate snapshots and impressionistic views of the urban landscape in physical terms. We discuss and support through representative examples the potential of such analysis in revealing how urban spaces are perceived by the general public, establishing links between tangible artefacts and cyber-social elements. These links may be in the form of references to, observations about, or events that enrich and move beyond the traditional physical characteristics of various locations. This leads to the emergence of alternate views of urban morphology that better capture the intricate nature of urban environments and their dynamics.”

Keywords: Urban Morphology, Social Media, GeoSocial, Cities, Big Data.

City Infoscapes – Fusing Data from Physical (L1, L2), Social, Perceptual (L3) Spaces to Derive Place Abstractions (L4) for Different Locations (N1, N2).

Recreational Hotspots Composed of “Locals” and “Tourists” with Perceived Artifacts Indicating “Use” and “Need”. (A) High Line Park (B) Madison Square Garden.

Moving from Spatial Neighborhoods to Geosocial Neighborhoods via Links.

The Emergence of Geosocial Neighborhoods in the Aftermath of the 2013 Boston Marathon Bombing.

Full Reference:

Crooks, A.T., Croitoru, A., Jenkins, A., Mahabir, R., Agouris, P. and Stefanidis, A. (2016). “User-Generated Big Data and Urban Morphology,” Built Environment, 42 (3): 396-414.